Priority of Bug

Posted On 08 December, 2008 at 9:51 PM by Rajeev Prabhakaran

Immediate Priority: The bug is of immediate priority if it blocks further testing and is very visible

At the earliest Priority: The bug must be fixed as early as possible, before the product is released

Normal Priority: The bug should be fixed if time permits

Later Priority: The bug may be fixed, but the product can be released as it is

Severity of Bug

Posted On at 9:47 PM by Rajeev Prabhakaran

Critical severity: The bug is of critical severity if it causes a system crash, data loss or data corruption.

Major severity: The bug is of major severity if it causes operational errors, wrong results or loss of functionality.

Minor severity: The bug is of minor severity if it causes defects in the user interface layout or spelling mistakes.
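The priority and severity levels described in these two posts can be recorded as two independent fields on a bug record. The following is a minimal sketch, not a definitive scheme: the `Bug` class, the numeric ranks and the `triage_order` sorting policy are assumptions made for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Priority(Enum):
    IMMEDIATE = 1
    AT_THE_EARLIEST = 2
    NORMAL = 3
    LATER = 4


class Severity(Enum):
    CRITICAL = 1
    MAJOR = 2
    MINOR = 3


@dataclass
class Bug:
    title: str
    priority: Priority
    severity: Severity


def triage_order(bugs):
    """Sort by priority first, then by severity (an assumed policy)."""
    return sorted(bugs, key=lambda b: (b.priority.value, b.severity.value))


bugs = [
    Bug("Typo on login page", Priority.LATER, Severity.MINOR),
    Bug("Crash blocks all checkout tests", Priority.IMMEDIATE, Severity.CRITICAL),
    Bug("Wrong total on invoice", Priority.AT_THE_EARLIEST, Severity.MAJOR),
]
for bug in triage_order(bugs):
    print(bug.priority.name, bug.severity.name, bug.title)
```

Keeping the two fields separate lets a minor-severity bug still carry a high priority, for example a spelling mistake on a very visible page.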

Black Box and Functional Testing

Posted On 07 December, 2008 at 10:07 PM by Rajeev Prabhakaran

Black Box Testing assumes that the tester does not know anything about the internals of the application that is going to be tested. The tester needs to understand what the program should do, and this is achieved through the business requirements and through meeting and talking with users.

Functional testing: This type of test evaluates a specific operating condition by supplying inputs and validating the results. Functional tests are designed to test boundaries. A combination of correct and incorrect data should be used in this type of test.
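As an illustration of combining correct and incorrect data around a boundary, here is a minimal sketch. The function under test, `parse_percentage`, and its 0 to 100 rule are hypothetical and stand in for whatever the requirements specify.

```python
def parse_percentage(text):
    """Hypothetical function under test: accept whole numbers from 0 to 100."""
    value = int(text)  # raises ValueError for non-numeric input
    if not 0 <= value <= 100:
        raise ValueError(f"out of range: {value}")
    return value


def accepts(text):
    """Return True if the input is accepted, False if it is rejected."""
    try:
        parse_percentage(text)
        return True
    except ValueError:
        return False


# Correct data at and inside the boundaries.
valid_inputs = ["0", "1", "50", "99", "100"]
# Incorrect data just outside the boundaries, plus malformed input.
invalid_inputs = ["-1", "101", "abc", ""]

print("all valid accepted:", all(accepts(t) for t in valid_inputs))
print("all invalid rejected:", not any(accepts(t) for t in invalid_inputs))
```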

Scalability and Performance Testing

Posted On at 2:15 PM by Rajeev Prabhakaran

Performance testing is designed to measure how quickly the program completes a given task. The primary objective is to determine whether the processing speed is acceptable in all parts of the program. If explicit requirements specify program performance, then performance tests are often performed as acceptance tests.

As a rule, performance tests are easy to automate. This makes sense above all when you want to make a performance comparison of different system conditions while using the user interface. The capture and automatic replay of user actions during testing eliminates variations in response times.

This type of test should be designed to verify response and execution time. Bottlenecks in a system are generally found during this stage of testing.
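A very small timing harness shows the idea of verifying execution time in an automated, repeatable way. This is only a sketch: `sample_task` is a stand-in workload, and taking the fastest of several runs is one common choice, not the only one.

```python
import time


def measure(task, repeats=5):
    """Run `task` several times; return the fastest wall-clock time in seconds."""
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        task()
        timings.append(time.perf_counter() - start)
    return min(timings)


def sample_task():
    """Stand-in workload; replace with the operation being measured."""
    return sum(i * i for i in range(100_000))


elapsed = measure(sample_task)
print(f"fastest run: {elapsed:.4f} s")
```

In an acceptance test, the measured time would be compared against the limit stated in the performance requirements.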

System Testing Check List

Posted On 05 December, 2008 at 6:46 PM by Rajeev Prabhakaran

- Runtime behavior on various operating systems or different hardware configurations

- Installability and configurability on various systems

- Capacity limitation (maximum file size, number of records, maximum number of concurrent users, etc.)

- Behavior in response to problems in the programming environment (system crash, unavailable network, full hard-disk, printer not ready)

- Protection against unauthorized access to data and programs

Web Testing - Functional System Testing

Posted On at 6:44 PM by Rajeev Prabhakaran

System tests check that the software functions properly from end to end. The components of the system include a database, Web-enabled application software modules, Web servers, Web-enabled application frameworks, deployed Web browser software, TCP/IP networking routers, media servers to stream audio and video, and messaging services for email. A common mistake of test professionals is to believe that they are conducting system tests while they are actually testing a single component of the system. For example, checking that the Web server returns a page is not a system test if the page contains only static HTML.

Web Testing - HTML Content Testing and Validation

Posted On at 6:40 PM by Rajeev Prabhakaran

HTML content-checking tests make a request to a Web page, parse the response for HTTP hyperlinks, request those hyperlinks from their associated hosts, and report whether the links returned successful or exceptional conditions. The downside is that the hyperlinks in a Web-enabled application are dynamic and can change, depending on the user's actions. There is little way to know the context of the hyperlinks in a Web-enabled application. Just checking the links' validity is meaningless, if not misleading. These tests were meant to test static Web sites, not Web-enabled applications.
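The parsing step of such a content check can be sketched with Python's standard `html.parser` module. The sample page below is made up, and the sketch deliberately stops at extracting the links; requesting each link and recording its status would need a network call that is left out here.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect the href value of every <a> tag fed to the parser."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def extract_links(html):
    collector = LinkCollector()
    collector.feed(html)
    return collector.links


# Hypothetical static page used only for illustration.
sample_page = '<p><a href="/home">Home</a> <a href="http://example.com/help">Help</a></p>'
print(extract_links(sample_page))
```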

Web Testing - Click-Stream Measurement Testing

Posted On at 6:38 PM by Rajeev Prabhakaran

Click-stream measurement testing makes a request for a set of Web pages and records statistics about the response, including total page views per hour, total hits per week, total user sessions per week, and derivatives of these numbers. The downside is that if your Web-enabled application takes twice as many pages as it should for a user to complete his or her goal, the click-stream test makes it look as though your Web site is popular, while to the user your Web site is frustrating.

Web Testing - Online Help Testing

Posted On at 6:34 PM by Rajeev Prabhakaran

Online help tests check the accuracy of help contents, correctness of features in the help system, and functionality of the help system.

Web Testing - Click-Stream Testing

Posted On at 6:29 PM by Rajeev Prabhakaran

Click-Stream Testing shows which URLs the user clicked, the Web site's user activity by time period during the day, and other data otherwise found in the Web server logs. Popular choices for Click-Stream Testing statistics include Keynote Systems Internet weather report, Web Trends log analysis utility, and the Net Mechanic monitoring service.

Disadvantage: Click-Stream Testing statistics reveal almost nothing about the user's ability to achieve their goals using the Web site. For example, a Web site may show a million page views, but 35% of the page views may simply be pages with the message "Found no search results." With Click-Stream Testing, there's no way to tell when users reach their goals.
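The disadvantage can be made concrete with a tiny tally. The records below are hand-made for illustration, and the record layout (URL plus returned page title) is an assumption; real click-stream data would come from the Web server logs.

```python
from collections import Counter

# Hypothetical, hand-made records: (requested URL, page title returned).
page_views = [
    ("/search?q=shoes", "Search results"),
    ("/search?q=shoez", "Found no search results"),
    ("/product/42", "Product details"),
    ("/search?q=sho", "Found no search results"),
]


def summarize(views):
    """Return the total number of views and the share that were empty searches."""
    titles = Counter(title for _url, title in views)
    total = len(views)
    return total, titles["Found no search results"] / total


total, no_result_share = summarize(page_views)
print(f"{total} page views, {no_result_share:.0%} were empty search results")
```

The raw page-view count looks healthy, while the second number hints that half of those views did not help the user at all.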

Web Testing - Security Testing

Posted On at 6:27 PM by Rajeev Prabhakaran

Security measures protect Web systems from both internal and external threats. E-commerce concerns and the growing popularity of Web-based applications have made security testing increasingly relevant. Security tests determine whether a company's security policies have been properly implemented; they evaluate the functionality of existing systems, not whether the security policies that have been implemented are appropriate.

Primary areas to cover when security testing Web applications:

- Application software

- Database

- Servers

- Client workstations

- Networks

Web Testing - External Beta Testing

Posted On at 6:25 PM by Rajeev Prabhakaran

External beta testing offers developers their first glimpse at how users may actually interact with a program. Copies of the program or a test URL, sometimes accompanied by a letter of instruction, are sent out to a group of volunteers who try out the program and respond to questions in the letter. Beta testing is black-box, real-world testing. Beta testing can be difficult to manage, and the feedback that it generates normally comes too late in the development process to contribute to improved usability and functionality. External beta-tester feedback may be reflected in a future release.

Web Testing - User Interface Testing

Posted On at 6:23 PM by Rajeev Prabhakaran

Ease-of-use (user interface) testing evaluates how intuitive a system is. Issues pertaining to navigation, usability, commands, and accessibility are considered. User interface functionality testing examines how well a UI operates according to its specifications.

Areas Covered in User Interface Testing

- Usability

- Look and feel

- Navigation controls and navigation bar

- Instructional and technical information style

- Images

- Tables

- Navigation branching

- Accessibility

Installation and Uninstallation Testing Tips

Posted On at 6:21 PM by Rajeev Prabhakaran

Web systems often require both client-side and server-side installs. Testing of the installer checks that installed features function properly, including icons, support documentation, the README file, and registry keys. The test verifies that the correct directories are created and that the correct system files are copied to the appropriate directories. The test also confirms that various error conditions are detected and handled gracefully.

Testing of the uninstaller checks that the installed directories and files are appropriately removed, that configuration and system-related files are also appropriately removed or modified, and that the operating environment is restored to its original state.
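One way to check that the environment is restored is to snapshot the file system before installation and compare it with a snapshot taken after uninstallation. The sketch below works in a temporary directory; `fake_install` and `fake_uninstall` are stand-ins invented for the example, not a real installer.

```python
import tempfile
from pathlib import Path


def snapshot(root):
    """Return the set of relative paths currently under `root`."""
    return {p.relative_to(root).as_posix() for p in Path(root).rglob("*")}


def fake_install(root):
    """Stand-in installer: creates a directory and two files."""
    app = Path(root) / "app"
    app.mkdir()
    (app / "README.txt").write_text("read me")
    (app / "app.cfg").write_text("key=value")


def fake_uninstall(root):
    """Stand-in uninstaller: removes everything the installer created."""
    app = Path(root) / "app"
    for item in app.iterdir():
        item.unlink()
    app.rmdir()


with tempfile.TemporaryDirectory() as root:
    before = snapshot(root)
    fake_install(root)
    installed = snapshot(root)
    fake_uninstall(root)
    after = snapshot(root)

print("created by installer:", sorted(installed - before))
print("environment restored:", after == before)
```

A real test would also cover registry keys and modified configuration files, which a directory snapshot alone does not see.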

Compatibility and Configuration Testing

Posted On at 6:20 PM by Rajeev Prabhakaran

Compatibility and configuration testing checks that an application functions properly across various hardware and software environments. Often, the strategy is to run the functional acceptance simple tests or a subset of the task-oriented functional tests on a range of software and hardware configurations. Sometimes, another strategy is to create a specific test that takes into account the error risks associated with configuration differences. For example, you might design an extensive series of tests to check for browser compatibility issues. Software compatibility configurations include variances in OS versions, input/output (I/O) devices, extensions, network software, concurrent applications, online services and firewalls. Hardware configurations include variances in manufacturers, CPU types, RAM, graphic display cards, video capture cards, sound cards, monitors, network cards, and connection types (e.g. T1, DSL, modem).
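The range of configurations to cover can be enumerated as the cross product of the variables being varied. This is a minimal sketch; the values in the three lists are placeholders, and in practice the full matrix is usually trimmed to the combinations that carry the most risk.

```python
from itertools import product

# Placeholder values; substitute the configurations relevant to the product.
operating_systems = ["Windows", "Linux"]
browsers = ["Browser A", "Browser B", "Browser C"]
connections = ["T1", "DSL", "modem"]


def build_matrix(*dimensions):
    """Return every combination of the given configuration dimensions."""
    return list(product(*dimensions))


matrix = build_matrix(operating_systems, browsers, connections)
print(len(matrix), "configurations")
for config in matrix[:3]:
    print(config)
```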

Exploratory Testing

Posted On at 6:19 PM by Rajeev Prabhakaran

Exploratory Testing does not involve a test plan, checklist, or assigned tasks. The strategy here is to use past testing experience to make educated guesses about places and functionality that may be problematic. Testing is then focused on those areas. Exploratory testing can be scheduled. It can also be reserved for unforeseen downtime that presents itself during the testing process.

Real-World User-Level Testing

Posted On at 6:19 PM by Rajeev Prabhakaran

This method of testing finds errors that formal test cases missed. These tests simulate the actions customers may take with a program.

Forced Error Testing

Posted On at 6:18 PM by Rajeev Prabhakaran

The forced-error test (FET) consists of negative test cases that are designed to force a program into error conditions. A list of all error messages that the program issues should be generated. The list is used as a baseline for developing test cases. An attempt is made to generate each error message in the list. Obviously, tests to validate error-handling schemes cannot be performed until all the error handling and error messages have been coded. However, FETs should be thought through as early as possible. Sometimes, the error messages are not available. The error cases can still be considered by walking through the program and deciding how the program might fail in a given user interface element, such as a dialog, or in the course of executing a given task or printing a given report. Test cases should be created for each condition to determine what error message is generated.
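The baseline idea can be sketched as follows: keep the list of expected error messages, run negative cases, and report any message that was never triggered. The function under test, `parse_quantity`, and its three messages are hypothetical.

```python
# Baseline: every error message the (hypothetical) program can issue.
EXPECTED_MESSAGES = {
    "quantity is required",
    "quantity must be a whole number",
    "quantity must be between 1 and 99",
}


def parse_quantity(text):
    """Hypothetical function under test."""
    if text.strip() == "":
        raise ValueError("quantity is required")
    try:
        value = int(text)
    except ValueError:
        raise ValueError("quantity must be a whole number") from None
    if not 1 <= value <= 99:
        raise ValueError("quantity must be between 1 and 99")
    return value


# Negative test cases, each meant to force an error condition.
NEGATIVE_CASES = ["", "abc", "0", "100", "1.5"]


def triggered_messages(cases):
    """Run each case and collect the error messages that were raised."""
    seen = set()
    for case in cases:
        try:
            parse_quantity(case)
        except ValueError as error:
            seen.add(str(error))
    return seen


missing = EXPECTED_MESSAGES - triggered_messages(NEGATIVE_CASES)
print("messages never triggered:", missing or "none")
```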

Task Oriented Functional Testing

Posted On at 5:57 PM by Rajeev Prabhakaran

The task-oriented functional test (TOFT) consists of positive test cases that are designed to verify program features by checking the task that each feature performs against specifications, user guides, requirements, and design documents. Usually, features are organized into a list or test matrix format. Each feature is tested for:

- The validity of the task it performs with supported data conditions under supported operating conditions

- The integrity of the task's end result

- The feature's integrity when used in conjunction with related features

Deployment Acceptance Testing

Posted On at 5:56 PM by Rajeev Prabhakaran

The configuration on which the Web system will be deployed will often be much different from the development and test configurations. Testing efforts must consider this in the preparation and writing of test cases for installation-time acceptance tests. This type of test usually includes the full installation of the applications to the targeted environments or configurations.

Functional Acceptance Simple Test

Posted On at 5:55 PM by Rajeev Prabhakaran

The functional acceptance simple test (FAST) is run on each development release to check that key features of the program are appropriately accessible and functioning properly on at least one test configuration (preferably the minimum or common configuration). This test suite consists of simple test cases that check the lowest level of functionality for each command, to ensure that task-oriented functional tests (TOFTs) can be performed on the program. The objective is to decompose the functionality of a program down to the command level and then apply test cases to check that each command works as intended. No attention is paid to the combination of these basic commands, the context of the feature that is formed by these combined commands, or the end result of the overall feature. For example, FAST for a File/Save As menu command checks that the Save As dialog box displays. However, it does not validate that the overall file-saving feature works, nor does it validate the integrity of saved files.

Release Acceptance Test

Posted On at 5:54 PM by Rajeev Prabhakaran

The release acceptance test (RAT), also referred to as a build acceptance or smoke test, is run on each development release to check that each build is stable enough for further testing. Typically, this test suite consists of entrance and exit test cases plus test cases that check mainstream functions of the program with mainstream data. Copies of the RAT can be distributed to developers so that they can run the tests before submitting builds to the testing group. If a build does not pass the RAT, it is reasonable to do the following:

- Suspend testing on the new build and resume testing on the prior build until another build is received

- Report the failing criteria to the development team

- Request a new build
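A release acceptance run can be sketched as a small set of named checks whose failures are collected and reported back. The check names and lambdas below are hypothetical placeholders for real entrance, exit and mainstream-function checks.

```python
def run_release_acceptance(checks):
    """Run each named check; return the names of the checks that failed."""
    failures = []
    for name, check in checks.items():
        try:
            passed = check()
        except Exception:
            passed = False  # a crashing check counts as a failure
        if not passed:
            failures.append(name)
    return failures


# Hypothetical checks standing in for real smoke tests.
checks = {
    "application starts": lambda: True,
    "main window opens": lambda: True,
    "sample file loads": lambda: 1 / 0,  # simulates a crashing check
}

failures = run_release_acceptance(checks)
if failures:
    print("Build rejected; failing criteria to report:", failures)
else:
    print("Build accepted for further testing")
```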

Types of Testing Under Each Testing Level

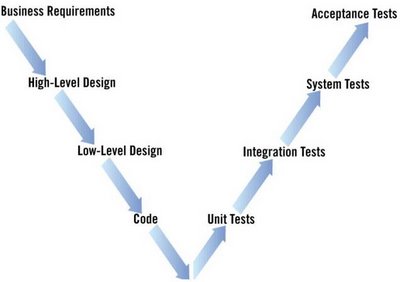

Posted On at 5:48 PM by Rajeev Prabhakaran

1. Unit Testing

- Unit Testing is primarily carried out by the developers themselves

- Deals with the functional correctness and completeness of individual program units

- White box testing methods are employed

2. Integration Testing

- Integration Testing: Deals with testing when several program units are integrated

- Regression testing: An unintended change of behavior caused by a modification or addition is called a ‘Regression’. Regression testing is used to detect such changes and keep them to a minimum

- Incremental Integration Testing: Checks for bugs that appear when a module is integrated into the existing system

- Smoke Testing: A battery of tests that checks the basic functionality of the program. If it fails, the program is not sent for further testing

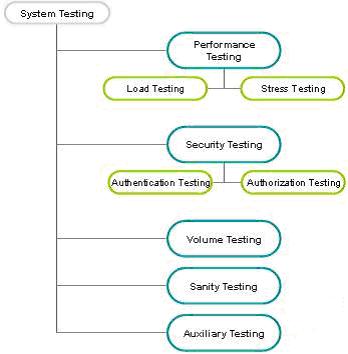

3. System Testing

- System Testing : Deals with testing the whole program system for its intended purpose

- Recovery testing: The system is forced to fail, and how well it recovers from the failure is checked

- Security Testing: Checks the capability of the system to defend itself from hostile attacks on programs and data

- Load & Stress Testing: The system is tested under maximum load, and its extreme stress points are identified

- Performance Testing: Used to determine the processing speed

- Installation Testing: Installation and uninstallation are checked on the target platform

4. Acceptance Testing

- UAT: ensures that the project satisfies the customer requirements

- Alpha Testing : It is the test done by the client at the developer’s site

- Beta Testing : This is the test done by the end-users at the client’s site

- Long Term Testing : Checks for faults that occur during long-term use of the product

- Compatibility Testing : Determines how well the product holds up when it is moved between environments or product versions

Benefits of Automated Testing

Posted On at 5:46 PM by Rajeev Prabhakaran

- Fast - Automated tools run tests significantly faster than human users.

- Reliable - Tests perform precisely the same operations each time they are run, thereby eliminating human error.

- Repeatable - The tester can test how the Web site or application reacts after repeated execution of the same operations.

- Programmable - The tester can program sophisticated tests that bring out hidden information.

- Comprehensive - The tester can build a suite of tests that covers every feature in your Web site or application.

- Reusable - The tester can reuse tests on different versions of a Web site or application, even if the user interface changes.

Automated Testing

Posted On at 5:35 PM by Rajeev Prabhakaran

Automated testing is as simple as removing the "Manual Effort" and letting the computer do the thinking. This can range from integrated debug tests to much more intricate processes. The idea of the tests is to find bugs that are often very challenging or time intensive for human testers to find. This sort of testing can save many man hours and can be more "efficient" in some cases. But it will cost more to ask a developer to write more lines of code into the game (or an external tool) than it does to pay a tester, and there is always the chance there is a bug in the bug testing program. Re-usability is another problem; you may not be able to transfer a testing program from one title (or platform) to another. And of course, there is always the "human factor" of testing that can never truly be replaced.

Other successful alternatives or variations: Nothing is infallible. Realistically, a moderate split of human and automated testing can rule out a wider range of possible bugs than relying solely on one or the other. Giving the testers limited access to any automated tools can often help speed up the test cycle.

Testing Methods

Posted On at 5:33 PM by Rajeev Prabhakaran

1. White Box

White box testing, also called ‘Structural Testing’ or ‘Glass Box Testing’, tests the code with the system specs in mind. The inner workings are considered, so it is typically done by developers.

- Mutation Testing-A number of mutants of the same program are created with minor changes; given the same test case, none of their results should coincide with the result of the original program.

- Basic Path Testing-Testing is done based on flow graph notation; it uses cyclomatic complexity and graph matrices.

- Control Structure Testing-The flow of the control execution path is considered for testing. It also covers:

- Conditional Testing : Branch Testing, Domain Testing

- Data Flow Testing

- Loop testing: Simple, Nested, Conditional, Unstructured Loops
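The mutation testing idea from the list above can be illustrated with one hand-written mutant. This is a sketch under stated assumptions: `is_adult` and its mutant are invented for the example, and real mutation tools generate the mutants automatically.

```python
def is_adult(age):
    """Original program."""
    return age >= 18


def mutant_is_adult(age):
    """Mutant: the comparison operator was changed from >= to >."""
    return age > 18


def passes_all(func, cases):
    """True if the function gives the expected result for every case."""
    return all(func(value) == expected for value, expected in cases)


def is_killed(mutant, cases):
    """A mutant is killed when at least one test case fails against it."""
    return not passes_all(mutant, cases)


STRONG_CASES = [(17, False), (18, True), (30, True)]
WEAK_CASES = [(17, False), (30, True)]  # misses the boundary value 18

print("strong suite kills mutant:", is_killed(mutant_is_adult, STRONG_CASES))
print("weak suite kills mutant:", is_killed(mutant_is_adult, WEAK_CASES))
```

A mutant that survives, as with the weak suite here, points at a test case that is missing.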

2. Gray Box

Similar to Black box but the test cases, risk assessments, and test methods involved in gray box testing are developed based on the knowledge of the internal data and flow structures

3. Black Box

Also called ‘Functional Testing’ as it concentrates on testing of the functionality rather than the internal details of code.

Test cases are designed based on the task descriptions

- Comparison Testing-Test case results are compared with the results of the test oracle.

- Graph Based Testing-Cause and effect graphs are generated and cyclomatic complexity is considered in using the test cases.

- Boundary Value Testing-Boundary values of the equivalence classes are tested, because failures at the boundaries are often missed by equivalence class testing.

- Equivalence class Testing-Test inputs are classified into equivalence classes such that checking one input is taken to validate all the input values in that class.
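The last two techniques in this list fit together: equivalence classes supply one representative per class, and boundary value testing adds the values at and next to each edge. A minimal sketch for a valid integer range follows; the 1 to 99 range is an assumed example rule.

```python
def representatives(low, high):
    """One value from each equivalence class: below, inside and above the range."""
    return {"below": low - 1, "inside": (low + high) // 2, "above": high + 1}


def boundary_values(low, high):
    """Values at and next to each edge of the valid class."""
    return [low - 1, low, low + 1, high - 1, high, high + 1]


# Assumed rule for illustration: a quantity from 1 to 99 is valid.
print(representatives(1, 99))
print(boundary_values(1, 99))
```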

Project is not big enough to justify extensive testing

Posted On at 5:31 PM by Rajeev Prabhakaran

Consider the impact of project errors, not the size of the project. However, if extensive testing is still not justified, risk analysis is again needed and the considerations listed under "What if there isn't enough time for thorough testing?" do apply. The test engineer then should do "ad hoc" testing, or write up a limited test plan based on the risk analysis.

Requirements changing continuously?

Posted On at 5:26 PM by Rajeev Prabhakaran

Work with management early on to understand how requirements might change, so that alternate test plans and strategies can be worked out in advance. It is helpful if the application's initial design allows for some adaptability, so that later changes do not require redoing the application from scratch. Additionally, try to:

- Ensure the code is well commented and well documented; this makes changes easier for the developers

- Use rapid prototyping whenever possible; this will help customers feel sure of their requirements and minimize changes

- In the project's initial schedule, allow some extra time commensurate with probable changes

What should be done after a bug is found?

Posted On at 5:25 PM by Rajeev Prabhakaran

When a bug is found, it needs to be communicated and assigned to developers who can fix it. After the problem is resolved, fixes should be re-tested. Additionally, determinations should be made regarding requirements, software, hardware, safety impact, etc., for regression testing to check that the fixes didn't create other problems elsewhere. If a problem-tracking system is in place, it should encapsulate these determinations. A variety of commercial problem-tracking/management software tools are available. These tools, with the detailed input of software test engineers, will give the team complete information so developers can understand the bug, get an idea of its severity, reproduce it and fix it.
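The flow described above (communicate, assign, resolve, re-test) can be modeled as a small state machine. The status names and allowed transitions here are assumptions for illustration; real problem-tracking tools define their own workflows.

```python
from dataclasses import dataclass, field

# Assumed workflow: a failed re-test sends the bug back to "assigned".
ALLOWED = {
    "new": {"assigned"},
    "assigned": {"resolved"},
    "resolved": {"closed", "assigned"},
    "closed": set(),
}


@dataclass
class BugReport:
    summary: str
    steps_to_reproduce: list
    status: str = "new"
    history: list = field(default_factory=list)

    def move_to(self, new_status):
        """Change status, rejecting transitions the workflow does not allow."""
        if new_status not in ALLOWED[self.status]:
            raise ValueError(f"cannot go from {self.status} to {new_status}")
        self.history.append((self.status, new_status))
        self.status = new_status


report = BugReport("Save As dialog crashes", ["Open a file", "Choose File/Save As"])
report.move_to("assigned")
report.move_to("resolved")
report.move_to("closed")  # the fix passed its re-test
print(report.status, report.history)
```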

Test Case

Posted On at 5:24 PM by Rajeev Prabhakaran

A test case is a document that describes an input, action, or event and its expected result, in order to determine if a feature of an application is working correctly. A test case should contain particulars such as:

a. Test case identifier, or Test Case ID

b. Test case name

c. Objective, or Test Case Description

d. Test conditions/setup, or Steps

e. Input data requirements, or Actions

f. Expected result

g. Actual result

h. Test log, or Test Case Status (Pass/Fail)

Please note, the process of developing test cases can help find problems in the requirements or design of an application, since it requires you to completely think through the operation of the application. For this reason, it is useful to prepare test cases early in the development cycle, if possible.
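The particulars listed above map naturally onto a record type. The sketch below is one possible layout, not a standard: the class name `ManualTestCase`, the field names and the rule that status is derived by comparing actual and expected results are all assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ManualTestCase:
    case_id: str
    name: str
    objective: str
    setup_steps: List[str]
    input_data: str
    expected_result: str
    actual_result: Optional[str] = None  # filled in when the test is run

    @property
    def status(self):
        """Derive Pass/Fail by comparing the actual and expected results."""
        if self.actual_result is None:
            return "Not run"
        return "Pass" if self.actual_result == self.expected_result else "Fail"


case = ManualTestCase(
    case_id="TC-001",
    name="Save As dialog opens",
    objective="Check that File/Save As displays the Save As dialog box",
    setup_steps=["Start the application", "Open any document"],
    input_data="Choose File/Save As",
    expected_result="Save As dialog box is displayed",
)
print(case.status)
case.actual_result = "Save As dialog box is displayed"
print(case.status)
```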

Test Plan

Posted On at 5:20 PM by Rajeev Prabhakaran

A software project test plan is a document that describes the objectives, scope, approach and focus of a software testing effort. The process of preparing a test plan is a useful way to think through the efforts needed to validate the acceptability of a software product. The completed document will help people outside the test group understand the why and how of product validation. It should be thorough enough to be useful, but not so thorough that no one outside the test group will be able to read it.

What is a Good Design?

Posted On at 5:19 PM by Rajeev Prabhakaran

Design could mean many things, but often refers to functional design or internal design. Good functional design is indicated by software functionality that can be traced back to customer and end-user requirements. Good internal design is indicated by software code whose overall structure is clear, understandable, easily modifiable and maintainable; is robust with sufficient error handling and status logging capability; and works correctly when implemented.

What is Good Code?

Posted On at 5:19 PM by Rajeev Prabhakaran

Good code is code that works, is free of bugs and is readable and maintainable. Organizations usually have coding standards all developers should adhere to, but every programmer and software engineer has different ideas about what is best and what are too many or too few rules. We need to keep in mind that excessive use of rules can stifle both productivity and creativity. Peer reviews and code analysis tools can be used to check for problems and enforce standards.

What is Quality?

Posted On at 5:03 PM by Rajeev Prabhakaran

Quality software is software that is reasonably bug-free, delivered on time and within budget, meets requirements and expectations and is maintainable. However, quality is a subjective term. Quality depends on who the customer is and their overall influence in the scheme of things. Customers of a software development project include end-users, customer acceptance test engineers, customer contract officers, customer management, the development organization's management, test engineers, testers, salespeople, software engineers, stockholders and accountants. Each type of customer will have his or her own slant on quality. The accounting department might define quality in terms of profits, while an end-user might define quality as user friendly and bug free.

An Inspection

Posted On at 5:02 PM by Rajeev Prabhakaran

An inspection is a formal meeting, more formalized than a walk-through, and typically involves 3-10 people including a moderator, a reader (the author of whatever is being reviewed) and a recorder (to make notes in the document). The subject of the inspection is typically a document, such as a requirements document or a test plan. The purpose of an inspection is to find problems and see what is missing, not to fix anything. The result of the meeting should be documented in a written report. Attendees should prepare for this type of meeting by reading through the document before the meeting starts; most problems are found during this preparation. Preparing for inspections is difficult, but inspection is one of the most cost-effective methods of ensuring quality, since bug prevention is more cost effective than bug detection.

Testing for Localizable Strings (Separate String from Code)

Posted On at 4:59 PM by Rajeev Prabhakaran

Localizable strings are strings that are no longer hard coded and compiled directly into the program's executable files. To test for them, the tester creates a pseudo build, either automatically or manually. In a pseudo-language build, the native language string files are run through a parser that changes them in some way so they can easily be identified by the testing department. Next, a build is created using these strings so they show up in the user interface instead of the English strings. This helps find strings that haven't been put into separate string files, problems with displaying foreign text, and problems with string expansion where foreign strings will be truncated.
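The parser step of a pseudo build can be sketched as a function that marks, accents and pads every string. Everything here is an assumption chosen for illustration: the bracket markers, the vowel substitutions, the 30% padding and the sample string table.

```python
# Map plain vowels to accented ones so pseudo strings are easy to spot.
ACCENTED = str.maketrans("aeiouAEIOU", "àéîõüÀÉÎÕÜ")


def pseudo_localize(text, expansion=0.3):
    """Accent the vowels, pad for string expansion, and wrap in brackets."""
    padding = "~" * max(1, round(len(text) * expansion))
    return "[" + text.translate(ACCENTED) + padding + "]"


# Hypothetical string table; real projects load this from resource files.
strings = {"save_button": "Save", "open_dialog_title": "Open File"}
pseudo = {key: pseudo_localize(value) for key, value in strings.items()}
for key, value in pseudo.items():
    print(key, "->", value)
```

Any text in the running pseudo build that appears without brackets is likely still hard coded, and any string whose closing bracket is cut off shows where expansion would truncate a translation.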

Two environments to test for the program in Chinese

- In a Chinese Windows system (in China)

- In an English Windows system with Chinese language support (in USA)

Online help

- Accuracy

- Good reading

- Help is a combination of writing and programming

- Test hypertext links

- Test the index

- Watch the style

Some specific testing recommendations

- Make your own list of localization requirements

- Automated testing

The documentation tester's objectives

- Checking the technical accuracy of every word

- Look out for confusing writing

- Look out for missing features

- Give suggestions without justifying

Considering Localization Testing

Posted On at 4:59 PM by Rajeev Prabhakaran

Testing resource files [separate strings from the code]. Solution: create a pseudo build

String expansion: String size change breaking layout and alignment. When words or sentences are translated into other languages, most of the time the resulting string will be either longer or shorter than the native language version of the string. Two solutions to this problem:

a. Account for the space needed for string expansion, adjusting the layout of your dialog accordingly

b. Separate your dialog resources into separate dynamic libraries.

Data format localization: European style is DD/MM/YY; North American style is MM/DD/YY. Also check currency, time and number formats, and addresses
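The two date styles can produce strings that are both valid but mean different days, which is why they need explicit test data. A minimal sketch using `datetime.date.strftime` follows; the `FORMATS` table and `render` helper are names made up for the example.

```python
from datetime import date

FORMATS = {
    "European": "%d/%m/%y",        # DD/MM/YY
    "North American": "%m/%d/%y",  # MM/DD/YY
}


def render(day, style):
    """Format a date in the given regional style."""
    return day.strftime(FORMATS[style])


sample = date(2008, 12, 5)  # 5 December 2008
for style in FORMATS:
    print(style, render(sample, style))
```

Dates whose day is 12 or lower are the useful test values, because both styles yield a plausible date and a mix-up would go unnoticed.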

Character sets: ASCII or non-ASCII. A single-byte character set (8 bits, e.g. US) holds up to 256 characters; a double-byte character set (16 bits, e.g. Chinese) holds up to 65,536 code points

Encoding: Unicode supports many of the world's written languages in a single character encoding. Note: For double-byte character sets such as Chinese, it is better to encode Unicode text as UTF-8, because UTF-8 is a variable-length encoding of Unicode that can be easily sent through the network as a single-byte stream
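The variable-length property of UTF-8 is easy to observe directly. The sketch below encodes three sample characters and checks that the bytes decode back to the same text; the helper name `utf8_length` is made up for the example.

```python
def utf8_length(text):
    """Number of bytes the text occupies when encoded as UTF-8."""
    return len(text.encode("utf-8"))


samples = [
    "A",        # basic Latin letter
    "\u00e9",   # e with acute accent
    "\u4e2d",   # a common Chinese character
]
for sample in samples:
    encoded = sample.encode("utf-8")
    # The byte stream must decode back to the original text.
    assert encoded.decode("utf-8") == sample
    print(repr(sample), utf8_length(sample), "byte(s):", encoded.hex())
```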

Builds and installer: Creating an environment that supports a single version of your code and multiple versions of the language files

Program installation and uninstallation on foreign-language machines

Testing with foreign characters: For example, enter foreign text for the user name and password. For entering European or Latin characters on Windows:

a. Using Character Map tool

Search: Start-Programs-Accessories-Accessibility-System Tools-

b. Escape sequences. Example: ALT + 128

For Asian languages, use what is usually called an IME (input method editor)

Chinese: I use GB encoding with the Pinyin input mode

Foreign Keyboards or On-Screen keyboard

Text filters: Programs that are used to collect and manipulate data usually provide the user with a mechanism for searching and filtering that data. As a global software tester, you need to make sure that the filtering and searching capabilities of your program work correctly with foreign text. A common problem is that the filter ignores the accent marks used in foreign text
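A search filter can be tested both for treating accents as significant and for a deliberate accent-insensitive mode. The sketch below uses the standard `unicodedata` module; the `search` function and the sample records are hypothetical, and the records mix precomposed and decomposed forms of the same accented letter on purpose.

```python
import unicodedata


def normalize(text):
    """Compose characters (NFC) and fold case so equal text compares equal."""
    return unicodedata.normalize("NFC", text).casefold()


def strip_accents(text):
    """Decompose characters (NFD) and drop the combining accent marks."""
    decomposed = unicodedata.normalize("NFD", text)
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))


def search(records, query, ignore_accents=False):
    """Return the records containing the query, optionally ignoring accents."""
    key = strip_accents if ignore_accents else (lambda s: s)
    wanted = key(normalize(query))
    return [r for r in records if wanted in key(normalize(r))]


records = [
    "Caf\u00e9 Central",   # precomposed e-acute
    "Cafe\u0301 Nord",     # "e" followed by a combining acute accent
    "Cafe Sud",            # no accent
]
print(search(records, "caf\u00e9"))
print(search(records, "cafe"))
print(search(records, "cafe", ignore_accents=True))
```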

Loading, saving, importing, and exporting high and low ASCII

Asian text in the program: how double-byte character sets work

Watch the style

Two environments to test for the program in Chinese

a. In a Chinese Windows system (in China)

b. In an English Windows system with Chinese language support (in USA)

Microsoft language codes:

CHS - Chinese Simplified

CHT - Chinese Traditional (Taiwan)

ENU - English (United States)

FRA - French (France)

Java Languages codes

zh_CN - Chinese Simplified

zh_TW - Chinese Traditional (Taiwan)

fr or fr_FR - French (France)

en or en_US - English (United States)

More to consider in localization testing:

* Hot keys

* Garbled translations

* Error message identifiers

* Hyphenation rules

* Spelling rules

* Sorting rules

* Uppercase and lowercase conversion

Localization Aspects

Posted On at 4:52 PM by Rajeev Prabhakaran

Terminology

The selection and definition of terms, as well as their correct and consistent usage, are preconditions for successful localization:

- Laymen and expert users

- In most cases innovative domains and topics

- Huge projects with many persons involved

- Consistent terminology throughout all products

- No synonyms allowed

- Predefined terminology (environment, laws, specifications, guidelines, corporate language)

Symbols

- Symbols are culture-dependent, but often they cannot be modified by the localizer

- Symbols are often adopted from other (common) spheres of life

- Symbols often use allusions (concrete for abstract); in some cases, homonyms or even homophones are used

Illustrations and Graphics

- Illustrations and graphics are very often culture-dependent, not only in content but also in the way they are presented

- Illustrations and graphics should be adapted to the (technical) needs of the target market (screen shots, manuals)

- Illustrations and graphics often contain textual elements that must be localized, but cannot be isolated

Colors have different meanings in different cultures

Character sets

- Languages are based on different character sets ("alphabets")

- The localized product must be able to handle (display, process, sort, etc.) the needed character set

- "US English" character sets (1 byte, 7 bit or 8 bit)

- Product development for internationalization should be based on UNICODE (2 byte or 4 byte)
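To make the byte sizes above concrete, here is a small sketch that encodes one ASCII letter and one Chinese character in several encodings. The choice of characters is arbitrary, and the little-endian codec names are used only so that no byte order mark is counted.

```python
ascii_letter = "A"
chinese_char = "\u4e2d"  # the character "中"

# One byte is enough for US English text.
print(len(ascii_letter.encode("ascii")))

# The same Chinese character takes a different number of bytes
# depending on the Unicode encoding form.
sizes = {
    "utf-8": len(chinese_char.encode("utf-8")),
    "utf-16": len(chinese_char.encode("utf-16-le")),
    "utf-32": len(chinese_char.encode("utf-32-le")),
}
print(sizes)

# A 7-bit character set cannot represent the character at all.
try:
    chinese_char.encode("ascii")
    ascii_ok = True
except UnicodeEncodeError:
    ascii_ok = False
print(ascii_ok)
```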

Fonts and typography

- Font types and font families are used in different cultures with varying frequency and for different text types and parts of text

- Example: In English manuals fonts with serifs (Times Roman) are preferred; In German manuals fonts without serifs (Helvetica) are preferred

- Example: English uses capitalization more frequently (e.g. CAUTION) for headers and parts of text

Language and style

- In addition to the language specific features of grammar, syntax and style, there are cultural conventions that must be taken into account for localization

- In US English, an informal style is preferred: the reader is addressed directly, "simple" verbs are used, repetition of parts of text is accepted, etc.

- Formulating headers (L10N)

- Long compound words (L10N)

- Elements of the user interface (open the File menu - the Open dialog box appears - click the Copy command, etc.)

Formats

- Date, currency, units of measurement

- Paper format

- Different length of text

a. Consequences for the volume of documents, number of pages, page number (table of contents, index) etc.

b. Also for the size of buttons (IT, machines etc.)
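As an example of the format differences listed above, the sketch below prints the same date in three conventions. The patterns in `DATE_PATTERNS` are illustrative assumptions chosen for this example; a real product would take them from the platform's locale data rather than from a hard-coded table.

```python
from datetime import date

# Illustrative patterns only (assumed for this sketch).
DATE_PATTERNS = {
    "en_US": "%m/%d/%Y",
    "fr_FR": "%d/%m/%Y",
    "zh_CN": "%Y-%m-%d",
}


def format_date(d, locale_code):
    """Format a date using the pattern registered for the locale code."""
    return d.strftime(DATE_PATTERNS[locale_code])


sample = date(2008, 12, 7)
for code in DATE_PATTERNS:
    print(code, format_date(sample, code))
```

The first two outputs contain the same digits in a different order, which is exactly the kind of ambiguity a localization test should catch.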

Globalization (G11N)

Posted On at 4:52 PM by Rajeev Prabhakaran

- Activities performed for the purpose of marketing a (software) product in regional markets

- Goal: Global marketing that accounts for economic and legal factors

- Focus on marketing, total enterprise solutions, and management support

I18N and L10N

Posted On at 4:48 PM by Rajeev Prabhakaran

These two abbreviations mean internationalization and localization respectively. Using the word "internationalization" as an example, here is how these abbreviations are derived. First, you take the first letter of the word you want to abbreviate; in this case the letter "I". Next, you take the last letter in the word; in this case the letter "N". These become the first and last letters in the abbreviation. Finally, you count the remaining letters in the word between the first and last letter. In this case, "nternationalizatio" has 18 characters in it, so we plug the number 18 between the "I" and "N"; thus I18N
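The derivation described above is mechanical enough to write as a few lines of code. The function name `numeronym` is just a label chosen for this sketch.

```python
def numeronym(word):
    """Abbreviate a word as first letter + count of inner letters + last letter."""
    if len(word) < 3:
        return word.upper()
    inner_count = len(word) - 2  # letters between the first and the last
    return f"{word[0]}{inner_count}{word[-1]}".upper()


for term in ("internationalization", "localization", "globalization"):
    print(term, "->", numeronym(term))
```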

I18N and L10N

- I18N and L10N comprise the whole of the effort involved in enabling a product

- I18N is "stuff" you have to do once

- L10N is "stuff" you have to do over and over again

- The more stuff you push into I18N out of L10N, the less complicated and expensive the process becomes

Internationalization (I18N)

Posted On at 4:46 PM by Rajeev Prabhakaran

- Developing a (software) product in such a way that it will be easy to adapt it to other markets (languages and cultures)

- Goal: eliminate the need to reprogram or recompile the original program

- Carried out by SW-Development in conjunction with Localization

- Handling foreign text and data within a program

- Sorting, importing and exporting text and data, correct handling of currency and date and time formats, string parsing, upper and lower case handling

- Separating strings from the source code and making sure that the foreign language string has enough space in your user interface to be displayed correctly

Internationalization

The aspect of development and testing relating to handling foreign text and data within a program. This includes sorting, importing and exporting text and data, correct handling of currency and date and time formats, string parsing, upper and lower case handling, and so forth. It also includes the task of separating strings (or user interface text) from the source code, and making sure that the foreign language strings have enough space in your user interface to be displayed correctly. You could think of internationalization as pertaining to the underlying functionality and workings of your program
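One way to picture "separating strings from the source code" is a message catalog keyed by locale. The sketch below is a simplified assumption of how that might look: the catalog contents, the `translate` and `fits` helpers, and the 10-character button width are all invented for illustration.

```python
# User interface text lives in a catalog, not in the program logic.
MESSAGES = {
    "en_US": {"greeting": "Welcome", "open_file": "Open file"},
    "fr_FR": {"greeting": "Bienvenue", "open_file": "Ouvrir le fichier"},
}
DEFAULT_LOCALE = "en_US"


def translate(key, locale_code):
    """Look up a string, falling back to the default locale if it is missing."""
    catalog = MESSAGES.get(locale_code, {})
    return catalog.get(key, MESSAGES[DEFAULT_LOCALE][key])


def fits(key, locale_code, max_chars):
    """Check whether the translated string fits into a control of max_chars."""
    return len(translate(key, locale_code)) <= max_chars


print(translate("greeting", "fr_FR"))
print(fits("open_file", "en_US", 10), fits("open_file", "fr_FR", 10))
```

The second check fails for French, which is the "enough space in your user interface" problem in miniature.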

Localization (L10N)

Posted On at 4:44 PM by Rajeev Prabhakaran

- Adapting a (software) product to a local or regional market

- Goal: Appropriate linguistic and cultural aspects

- Performed by translators, localizers, language engineers

Localization

The aspect of development and testing relating to the translation of the software and its presentation to the end user. This includes translating the program, choosing appropriate icons and graphics, and other cultural considerations. It also may include translating the program's help files and the documentation. You could think of localization as pertaining to the presentation of your program; the things the user sees

Walk-through

Posted On at 4:43 PM by Rajeev Prabhakaran

A walk-through is an informal meeting for evaluation or informational purposes. A walk-through is also a process at an abstract level. It's the process of inspecting software code by following paths through the code (as determined by input conditions and choices made along the way). The purpose of code walk-throughs is to ensure the code fits the purpose

Walk-throughs also offer opportunities to assess an individual's or team's competency

Cause of Software Bugs

Posted On at 4:04 PM by Rajeev Prabhakaran

Incorrect communication or no communication - as to specifics of what an application should or shouldn't do (the application's requirements)

Software complexity - the complexity of current software applications can be difficult to comprehend for anyone without experience in modern-day software development. Windows-type interfaces, client-server and distributed applications, data communications, enormous relational databases, and sheer size of applications have all contributed to the exponential growth in software/system complexity

Programming errors - programmers, like anyone else, can make mistakes

Changing requirements - the customer may not understand the effects of changes, or may understand and request them anyway - redesign, rescheduling of engineers, effects on other projects, work already completed that may have to be redone or thrown out, hardware requirements that may be affected, etc. If there are many minor changes or any major changes, known and unknown dependencies among parts of the project are likely to interact and cause problems, and the complexity of keeping track of changes may result in errors. Enthusiasm of engineering staff may be affected. In some fast-changing business environments, continuously modified requirements may be a fact of life. In this case, management must understand the resulting risks, and QA and test engineers must adapt and plan for continuous extensive testing to keep the inevitable bugs from running out of control

Time pressures - scheduling of software projects is difficult at best, often requiring a lot of guesswork. When deadlines loom and the crunch comes, mistakes will be made.

Poorly documented code - it's tough to maintain and modify code that is badly written or poorly documented; the result is bugs. In many organizations management provides no incentive for programmers to document their code or write clear, understandable code. In fact, it's usually the opposite: they get points mostly for quickly turning out code, and there's job security if nobody else can understand it

Software development tools - visual tools, class libraries, compilers, scripting tools, etc. often introduce their own bugs or are poorly documented, resulting in added bugs

Configuration Management

Posted On at 4:04 PM by Rajeev Prabhakaran

Configuration management covers the processes used to control, coordinate, and track: code, requirements, documentation, problems, change requests, designs, tools/compilers/libraries/patches, changes made to them, and who makes the changes

Verification and Validation

Posted On at 4:01 PM by Rajeev Prabhakaran

Verification typically involves reviews and meetings to evaluate documents, plans, code, requirements, and specifications. This can be done with checklists, issues lists, walk-throughs, and inspection meetings. Validation typically involves actual testing and takes place after verifications are completed

Bug Life Cycle

Posted On at 4:00 PM by Rajeev Prabhakaran

1. New: When a bug is first raised, it is in the New state

2. Open: When the developer checks the bug, it moves to the Open state

3. Fixed: When the developer fixes the bug, it moves to the Fixed state

4. Closed: The tester checks again; if the bug no longer occurs, it moves to the Closed state

5. Reopen: If the bug occurs again, it moves to the Reopen state
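The life cycle above can be read as a small state machine. The sketch below encodes it as a table of allowed transitions. Note one assumption that the list does not state explicitly: a reopened bug is taken to go back to Fixed once the developer fixes it again. The names `TRANSITIONS` and `move` are invented for this example.

```python
# Allowed transitions between bug states.
# "Reopen" -> "Fixed" is an assumption made for this sketch.
TRANSITIONS = {
    "New": {"Open"},
    "Open": {"Fixed"},
    "Fixed": {"Closed", "Reopen"},
    "Reopen": {"Fixed"},
    "Closed": set(),
}


def move(state, new_state):
    """Return new_state if the transition is allowed, otherwise raise ValueError."""
    if new_state not in TRANSITIONS[state]:
        raise ValueError(f"cannot move from {state} to {new_state}")
    return new_state


state = "New"
for step in ("Open", "Fixed", "Reopen", "Fixed", "Closed"):
    state = move(state, step)
    print(state)
```

A defect tracker that enforces a table like this will refuse, for example, to close a bug that was never fixed.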

Difference between Bug and Error

Posted On at 3:58 PM by Rajeev Prabhakaran

Bug

The software has a bug if any of the following applies:

- It contains anything that was not defined by the client

- Excess things have been added to the software

- It does not produce the expected result

- It is not user friendly

Error

An error is a programming mistake: if a function does not respond with the expected result, it is an error. A bug is anything that is missing from or added in excess to the software, or any way in which the software is functionally not well defined or not user friendly. Errors therefore also come under bugs

Difference between User Acceptance Testing and System Testing

Posted On at 3:57 PM by Rajeev Prabhakaran

User Acceptance Testing is performed by the client of the application to determine whether the application is developed as per the requirements specified by him/her. It is performed within the development organization or at the client site. Alpha testing and beta testing are examples of acceptance testing

System testing is performed by the testers to determine whether the application satisfies the requirements as specified in the SRS

Testing Techniques

Posted On at 3:56 PM by Rajeev Prabhakaran

White Box Testing

- Aims to establish that the code works as designed

- Examines the internal structure and implementation of the program

- Targets specific paths through the program

- Needs accurate knowledge of the design, implementation and code

Black Box Testing

- Aims to establish that the code meets the requirements

- Tends to be applied later in the life cycle

- Mainly aimed at finding deviations in behavior from the specification or requirement

- Causes are inputs, effects are observable outputs

Different Types of Testing

Posted On at 3:33 PM by Rajeev Prabhakaran

Performance testing

a. Performance testing is designed to test the run-time performance of software within the context of an integrated system. It is not until all system elements are fully integrated and certified as free of defects that the true performance of a system can be ascertained

b. Performance tests are often coupled with stress testing and often require both hardware and software infrastructure. That is, it is necessary to measure resource utilization in an exacting fashion. External instrumentation can monitor intervals and log events. By instrumenting the system, the tester can uncover situations that lead to degradation and possible system failure
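A very small form of instrumentation is to time a task repeatedly and record the intervals. The sketch below does this with the standard library timer; `sample_task` is a placeholder standing in for whatever operation is under test, and the repeat count is arbitrary.

```python
import time


def measure(task, repeats=5):
    """Run task several times and return (best, average) duration in seconds."""
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        task()
        timings.append(time.perf_counter() - start)
    return min(timings), sum(timings) / len(timings)


def sample_task():
    # Placeholder workload; a real test would call the system under test.
    sum(i * i for i in range(10_000))


best, average = measure(sample_task)
print(f"best={best:.6f}s average={average:.6f}s")
```

Repeating the measurement matters because a single run can be distorted by whatever else the machine is doing at that moment.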

Security testing

If your site requires firewalls, encryption, user authentication, financial transactions, or access to databases with sensitive data, you may need to test these and also test your site's overall protection against unauthorized internal or external access

Exploratory Testing

Often taken to mean a creative, informal software test that is not based on formal test plans or test cases; testers may be learning the software as they test it

Benefits Realization tests

With the increased focus on the value of Business returns obtained from investments in information technology, this type of test or analysis is becoming more critical. The benefits realization test is a test or analysis conducted after an application is moved into production in order to determine whether the application is likely to deliver the original projected benefits. The analysis is usually conducted by the business user or client group who requested the project and results are reported back to executive management

Mutation Testing

Mutation testing is a method for determining if a set of test data or test cases is useful, by deliberately introducing various code changes ('bugs') and retesting with the original test data/cases to determine if the 'bugs' are detected. Proper implementation requires large computational resources
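A toy example may help here. Below, a single "bug" is introduced by hand (the comparison `>=` is changed to `>`), and two test suites are run against both versions. Everything in it, including the `is_adult` function and the two suites, is invented for illustration; real mutation testing tools generate many mutants automatically.

```python
def is_adult(age):
    """Original code under test."""
    return age >= 18


def mutant_is_adult(age):
    """Mutant: '>=' deliberately changed to '>'."""
    return age > 18


def weak_suite(fn):
    """Test data that avoids the boundary value."""
    return fn(30) is True and fn(5) is False


def strong_suite(fn):
    """Same tests plus the boundary value 18."""
    return weak_suite(fn) and fn(18) is True


def kills(suite, original, mutant):
    """A suite kills a mutant if it passes on the original but fails on the mutant."""
    return suite(original) and not suite(mutant)


print(kills(weak_suite, is_adult, mutant_is_adult))
print(kills(strong_suite, is_adult, mutant_is_adult))
```

The weak suite lets the mutant survive, which tells you the test data is missing a case; the strong suite detects it.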

Sanity testing

Typically an initial testing effort to determine if a new software version is performing well enough to accept it for a major testing effort. For example, if the new software is crashing systems every 5 minutes, bogging down systems to a crawl, or destroying databases, the software may not be in a 'sane' enough condition to warrant further testing in its current state

Build Acceptance Tests

Build Acceptance Tests should take less than 2-3 hours to complete (15 minutes is typical). These test cases simply ensure that the application can be built and installed successfully. Other related test cases ensure that Testing received the proper Development Release Document plus other build related information (drop point, etc.). The objective is to determine if further testing is possible. If any Level 1 test case fails, the build is returned to developers un-tested

Smoke Tests

Smoke Tests should be automated and take less than 2-3 hours (20 minutes is typical). These test cases verify the major functionality at a high level. The objective is to determine if further testing is possible. These test cases should emphasize breadth more than depth. All components should be touched, and every major feature should be tested briefly by the Smoke Test. If any Level 2 test case fails, the build is returned to developers un-tested

Bug Regression Testing

Every bug that was “Open” during the previous build, but marked as “Fixed, Needs Re-Testing” for the current build under test, will need to be regressed, or re-tested. Once the smoke test is completed, all resolved bugs need to be regressed. It should take between 5 minutes and 1 hour to regress most bugs

Database Testing

Database testing is done manually in real time. It checks the data flow between the front end and the back end, observing whether operations performed on the front end take effect on the back end.

The approach is as follows:

When a record is added through the front end, check on the back end whether the addition took effect. Do the same for delete and update. Other database tests include checking mandatory fields, checking the constraints and rules applied to the table, and sometimes checking procedures using SQL Query Analyzer
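The same approach can also be scripted. The sketch below uses an in-memory SQLite database as a stand-in for the real back end; the `customers` table and the `add_customer` function (playing the role of the front-end operation) are assumptions made purely for this example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL)"
)


def add_customer(name):
    """Stand-in for the front-end 'add record' operation."""
    with conn:  # commits on success, rolls back on error
        conn.execute("INSERT INTO customers (name) VALUES (?)", (name,))


def count_customers(name):
    """Back-end check: how many rows exist with this name?"""
    row = conn.execute(
        "SELECT COUNT(*) FROM customers WHERE name = ?", (name,)
    ).fetchone()
    return row[0]


# Front-end add, then verify on the back end.
add_customer("Alice")
print(count_customers("Alice"))

# Mandatory field check: a missing name must be rejected by the constraint.
try:
    add_customer(None)
    mandatory_enforced = False
except sqlite3.IntegrityError:
    mandatory_enforced = True
print(mandatory_enforced)
```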

Functional Testing (or) Business functional testing

All the functions in the application should be tested against the requirements document to ensure that the product conforms with what was specified (that is, that they meet the functional requirements). This testing verifies that the crucial business functions are working in the application. Business functions are generally defined in the requirements document. Each business function has certain rules, which can’t be broken, whether they apply to the user interface behavior or to the data behind the application. Both levels need to be verified. Business functions may span several windows or several menu options, so simply testing that all windows and menus can be used is not enough to verify the business functions. You must verify the business functions as discrete units of your testing

* Study SRS

* Identify Unit Functions

* For each unit function

* Take each input function

* Identify Equivalence class

* Form Test cases

* Form Test cases for boundary values

* Form Test cases for Error Guessing

* Form Unit function v/s Test cases, Cross Reference Matrix
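For the equivalence class and boundary value steps above, a short sketch: assume a hypothetical unit function `accepts_age` whose requirement says that ages from 18 to 65 inclusive are valid. The helper names and the range are invented for illustration.

```python
LOW, HIGH = 18, 65  # valid range taken from the (hypothetical) requirement


def accepts_age(age):
    """Hypothetical unit function under test."""
    return LOW <= age <= HIGH


def boundary_values(low, high):
    """Values just below, on, and just above each boundary."""
    return [low - 1, low, low + 1, high - 1, high, high + 1]


def equivalence_representatives(low, high):
    """One representative value per equivalence class."""
    return {"below": low - 10, "valid": (low + high) // 2, "above": high + 10}


# Test cases as (input, expected result) pairs derived from the requirement.
boundary_cases = [(v, low_ok) for v, low_ok in zip(
    boundary_values(LOW, HIGH), [False, True, True, True, True, False]
)]
class_cases = [
    (value, name == "valid")
    for name, value in equivalence_representatives(LOW, HIGH).items()
]

for value, expected in boundary_cases + class_cases:
    print(value, expected, accepts_age(value) == expected)
```

Three representative values cover the equivalence classes, and six more cover the boundaries, instead of testing every possible age.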

User Interface Testing (or) structural testing

It verifies whether all the objects of the user interface design specifications are met. It examines the spelling of button text, window title text and label text. It checks for consistency or duplication of accelerator key letters and examines the positions and alignments of window objects
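The accelerator key check in particular lends itself to a small script. The sketch below assumes the common convention that an ampersand in a label marks the accelerator letter (for example `&File`); the function names and sample labels are made up for this example.

```python
from collections import defaultdict


def accelerator(label):
    """Return the lowercase letter following '&' in a label, or None."""
    index = label.find("&")
    if index == -1 or index + 1 >= len(label):
        return None
    return label[index + 1].lower()


def duplicate_accelerators(labels):
    """Group labels by accelerator letter and keep only the clashes."""
    by_key = defaultdict(list)
    for label in labels:
        key = accelerator(label)
        if key is not None:
            by_key[key].append(label)
    return {key: group for key, group in by_key.items() if len(group) > 1}


menu = ["&File", "&Edit", "F&ormat", "&Find"]
print(duplicate_accelerators(menu))
```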

Volume Testing

Testing the application with a voluminous amount of data to see whether the application produces the anticipated results (Boundary value analysis)

Stress Testing

Testing the application's response when system resources are scarce

Load Testing

It verifies the performance of the server under stress of many clients requesting data at the same time
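A minimal sketch of the idea, using threads to simulate many clients at once. The `handle_request` function is only a stand-in for a call to the real server, and the client count and sleep time are arbitrary assumptions.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(client_id):
    """Stand-in for a server call; a real test would hit the system under test."""
    time.sleep(0.01)  # simulated processing time
    return client_id * 2


def run_load(clients):
    """Send one request per simulated client at the same time."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=clients) as pool:
        results = list(pool.map(handle_request, range(clients)))
    elapsed = time.perf_counter() - start
    return results, elapsed


results, elapsed = run_load(20)
print(len(results), f"{elapsed:.3f}s")
```

Running it with increasing client counts and comparing the elapsed times gives a first impression of how the response time degrades under load.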

Installation testing

The tester should install the systems to determine whether installation process is viable or not based on the installation guide

Configuration Testing

The system should be tested to determine whether it works correctly with appropriate software and hardware configurations

Compatibility Testing

The system should be tested to determine whether it is compatible with other systems (applications) that it needs to interface with

Documentation Testing

It is performed to verify the accuracy and completeness of user documentation

1. This testing is done to verify whether the documented functionality matches the software functionality

2. The documentation is easy to follow, comprehensive and well edited

If the application under test has context sensitive help, it must be verified as part of documentation testing

Recovery/Error Testing

Testing how well a system recovers from crashes, hardware failures, or other catastrophic problems

Comparison Testing

Testing that compares software weaknesses and strengths to competing products

Acceptance Testing

Acceptance testing, which is black box testing, will give the client the opportunity to verify the system functionality and usability prior to the system being moved to production. The acceptance test will be the responsibility of the client; however, it will be conducted with full support from the project team. The Test Team will work with the client to develop the acceptance criteria

Alpha Testing

Testing of an application when development is nearing completion. Minor design changes may still be made as a result of such testing. Alpha Testing is typically performed by end-users or others, not by programmers or testers

Beta Testing

Testing when development and testing are essentially completed and final bugs and problems need to be found before the final release. Beta Testing is typically done by end-users or others, not by programmers or testers

Regression Testing

The objective of regression testing is to ensure software remains intact. A baseline set of data and scripts will be maintained and executed to verify changes introduced during the release have not “undone” any previous code. Expected results from the baseline are compared to results of the software being regression tested. All discrepancies will be highlighted and accounted for, before testing proceeds to the next level
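The baseline comparison described above might look like the following in its simplest form. The two `discount` functions are hypothetical: the first represents the previous release, and the second a new release with an accidental change. Amounts are in cents so that only integer arithmetic is involved.

```python
def discount_v1(total_cents):
    """Previous release: 10% off orders of 10000 cents or more."""
    if total_cents >= 10000:
        return total_cents - total_cents // 10
    return total_cents


def discount_v2(total_cents):
    """New release with an accidental change: '>=' became '>'."""
    if total_cents > 10000:
        return total_cents - total_cents // 10
    return total_cents


# Baseline: expected results recorded from the previous release.
BASELINE = {amount: discount_v1(amount) for amount in (5000, 10000, 20000)}


def regress(fn, baseline):
    """Return {input: (expected, actual)} for every discrepancy."""
    discrepancies = {}
    for given, expected in baseline.items():
        actual = fn(given)
        if actual != expected:
            discrepancies[given] = (expected, actual)
    return discrepancies


print(regress(discount_v2, BASELINE))
```

An empty result means the new release still matches the baseline; anything else has to be explained before testing moves on.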

Incremental Integration Testing

Continuous testing of an application as new functionality is added. This may require various aspects of an application's functionality be independent enough to work separately before all parts of the program are completed, or that test drivers are developed as needed. This type of testing may be performed by programmers or by testers

Usability Testing

Testing for 'user-friendliness'. Clearly this is subjective and will depend on the targeted end-user or customer. User interviews, surveys, video recording of user sessions, and other techniques can be used. Programmers and testers are usually not appropriate as usability testers

Integration Testing

Upon completion of unit testing, integration testing, which is black box testing, will begin. The purpose is to ensure distinct components of the application still work in accordance with customer requirements. Test sets will be developed with the express purpose of exercising the interfaces between the components. This activity is to be carried out by the Test Team. Integration test will be termed complete when actual results and expected results are either in line or differences are explainable/acceptable based on client input

System Testing

Upon completion of integration testing, the Test Team will begin system testing. During system testing, which is a black box test, the complete system is configured in a controlled environment to validate its accuracy and completeness in performing the functions as designed. The system test will simulate production in that it will occur in the “production-like” test environment and test all of the functions of the system that will be required in production. The Test Team will complete the system test. Prior to the system test, the unit and integration test results will be reviewed by SQA to ensure all problems have been resolved. It is important for higher level testing efforts to understand unresolved problems from the lower testing levels. System testing is deemed complete when actual results and expected results are either in line or differences are explainable/acceptable based on client input

Parallel/Audit Testing

Testing where the user reconciles the output of the new system to the output of the current system to verify the new system

Levels of Testing

Posted On at 1:27 PM by Rajeev Prabhakaran

1. Unit Testing

Unit testing is a procedure used to validate that a particular module of source code is working properly. The procedure is to write test cases for all functions and methods so that whenever a change causes a regression, it can be quickly identified and fixed

Benefits

The goal of unit testing is to isolate each part of the program and show that the individual parts are correct. Unit testing provides a strict, written contract that the piece of code must satisfy. As a result, it affords several benefits

a. Facilitates Change

b. Simplifies Integration

c. Documentation

d. Separation of Interface from Implementation
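As a small illustration of the "written contract" idea, here is a unit test written with Python's standard `unittest` module. The `add` function and the test names are placeholders chosen for this sketch, not part of any particular project.

```python
import unittest


def add(a, b):
    """The unit under test."""
    return a + b


class AddTests(unittest.TestCase):
    """Each test method states one expectation the unit must satisfy."""

    def test_positive_numbers(self):
        self.assertEqual(add(2, 3), 5)

    def test_negative_numbers(self):
        self.assertEqual(add(-2, -3), -5)

    def test_zero_is_neutral(self):
        self.assertEqual(add(7, 0), 7)


def run_tests():
    """Run the test case and return the result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(AddTests)
    return unittest.TextTestRunner(verbosity=0).run(suite)


result = run_tests()
print(result.wasSuccessful())
```

If a later change breaks `add`, one of these tests fails immediately, which is how unit tests make change and integration easier.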

2. Integrated Systems Testing

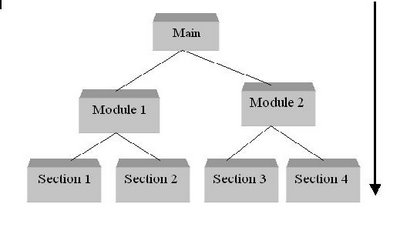

Integrated System Testing (IST) is a systematic technique for validating the construction of the overall Software structure while at the same time conducting tests to uncover errors associated with interfacing. The objective is to take unit tested modules and test the overall Software structure that has been dictated by design. IST can be done either as Top down integration or Bottom up Integration

3. System Testing

System testing is testing conducted on a complete, integrated system to evaluate the system's compliance with its specified requirements. System testing falls within the scope of Black box testing, and as such, should require no knowledge of the inner design of the code

System testing is actually done to the entire system against the Functional Requirement Specifications (FRS) and/or the System Requirement Specification (SRS). Moreover, system testing is an investigatory testing phase, where the focus is to have almost a destructive attitude and test not only the design, but also the behavior and even the believed expectations of the customer. It is also intended to test up to and beyond the bounds defined in the software/hardware requirements specifications. All the remaining testing types come under system testing

4. User Acceptance Testing

User Acceptance Testing (UAT) is performed by Users or on behalf of the users to ensure that the Software functions in accordance with the Business Requirement Document. UAT focuses on the following aspects

- All functional requirements are satisfied

- All performance requirements are achieved

- Other requirements like transportability, compatibility, error recovery etc. are satisfied

- Acceptance criteria specified by the user are met

Sample Entry and Exit Criteria for User Acceptance Testing

Entry Criteria

- Integration testing sign off was obtained

- Business requirements have been met or renegotiated with the Business Sponsor or representative

- UAT test scripts are ready for execution

- The testing environment is established

- Security requirements have been documented and necessary user access obtained

Exit Criteria

- UAT has been completed and approved by the user community in a transition meeting

- Change control is managing requested modifications and enhancements

- Business sponsor agrees that known defects do not impact a production release—no remaining defects are rated 3, 2, or 1

Project Roles and Responsibilities

Posted On at 12:44 PM by Rajeev Prabhakaran

Testers & Test Lead

Testing is the core competence of any Testing Organization

- Understand the Application Under Test

- Prepare test strategy

- Assist with preparation of test plan

- Design high-level conditions

- Develop test scripts

- Understand the data involved

- Execute all assigned test cases

- Record defects in the defect tracking system

- Retest fixed defects

- Assist the test leader with his/her duties

- Provide feedback in defect triage

- Automate test scripts

- Understanding of SRS

- Preparation of System Test Plan

- Formation of Test Team

- Schedule Preparation

- Module Allocation

- Reviews on Test Process

- Client Interaction

- Verify Status Reports

- Preparation of Software Requirements Specification (SRS)

- Formation of Development Team, Test Team

- Management of requirements throughout the project life cycle activities

- Preparation of Detailed Design Document

- Unit Test cases and Integration Test cases

- Guidance on programming and related coding conventions / standards

Software Requirements Specification (SRS) Contents

Posted On at 12:42 PM by Rajeev Prabhakaran

1. Introduction

- Purpose

- Scope

- Major Constraints and References

2. Functional Requirements

- Context Diagram

- Functional Decomposition Diagram

- System Functions

- Database Requirements

- User Interface Requirements

- External Software Interface / Compatibility Requirements

- Reports Requirements

- Security Requirements

3. Use Case Specifications

- Acceptance Criteria

Software Development Process

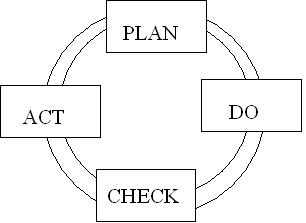

Posted On at 12:37 PM by Rajeev Prabhakaran

Plan: Devise a plan

Define your objective and determine the strategy and support methods required to achieve that objective

Do: Execute the plan

Create the conditions and perform the necessary training to execute the plan. Make sure everyone thoroughly understands the objectives and the plan.

Check: Check the result

Check to determine whether work is progressing according to the plan and whether the expected results are obtained. Check for performance of the set procedures, changes in conditions, or abnormalities that may appear

Act: Take necessary action

If your checkup reveals that the work is not being performed according to plan or that results are not what was anticipated, devise measures for appropriate action. Testing involves only the check component of the plan-do-check-act (PDCA) cycle

Software Life Cycle Models

Posted On at 12:08 PM by Rajeev Prabhakaran

1. Prototyping Model of Software Development

A prototype is a toy implementation of a system, usually exhibiting limited functional capabilities, low reliability, and inefficient performance. There are several reasons for developing a prototype. An important purpose is to illustrate the input data formats, messages, reports and the interactive dialogs to the customer. This is a valuable mechanism for gaining better understanding of the customer’s needs. Another important use of the prototyping model is that it helps critically examine the technical issues associated with the product development

2. Classic Waterfall Model

In a typical model, a project begins with feasibility analysis. On successfully demonstrating the feasibility of a project, the requirements analysis and project planning begins. The design starts after the requirements analysis is complete, and coding begins after the design is complete. Once the programming is completed, the code is integrated and testing is done. On successful completion of testing, the System is installed. After this, the regular operation and maintenance of the system takes place

3. Spiral Model

Developed by Barry Boehm in 1988, it provides the potential for rapid development of incremental versions of the software. In the spiral model, software is developed in a series of incremental releases. During early iterations, the incremental release might be a paper model or prototype. Each iteration consists of Customer Communication, Planning, Risk Analysis, Engineering, Construction & Release, and Customer Evaluation

Customer Communication

Tasks required to establish effective communication between developer and customer

Planning

Tasks required to define resources, timelines, and other project related information.

Risk Analysis

Tasks required to assess both technical and management risks.

Engineering

Tasks required to build one or more representations of the application.

Construction & Release

Tasks required to construct, test, install and provide user support (e.g., documentation and training)

Customer Evaluation

Tasks required to obtain customer feedback based on evaluation of the software representations created during the engineering stage and implemented during the installation stage

4. V-Model

Quality Control & Quality Assurance

Posted On at 11:44 AM by Rajeev Prabhakaran

Quality Assurance

All those planned and necessary ideas to provide adequate confidence that the product/service will satisfy the given requirements of quality

Quality Control

All those necessary steps taken for fulfilling the requirements of quality

Some Major Computer System Failures Caused by Bugs

Posted On at 11:31 AM by Rajeev Prabhakaran

1. In August of 2006 a U.S. government student loan service erroneously made public the personal data of as many as 21,000 borrowers on its web site, due to a software error. The bug was fixed and the government department subsequently offered to arrange for free credit monitoring services for those affected

2. A September 2006 news report indicated problems with software utilized in a state government's primary election, resulting in periodic unexpected rebooting of voter checking machines, which were separate from the electronic voting machines, and resulted in confusion and delays at voting sites. The problem was reportedly due to insufficient testing

3. Software bugs in a Soviet early-warning monitoring system nearly brought on nuclear war in 1983, according to news reports in early 1999. The software was supposed to filter out false missile detections caused by Soviet satellites picking up sunlight reflections off cloud-tops, but failed to do so. Disaster was averted when a Soviet commander, based on what he said was a '...funny feeling in my gut', decided the apparent missile attack was a false alarm. The filtering software code was rewritten

When to Stop Testing

Posted On at 11:19 AM by Rajeev Prabhakaran

It's difficult to determine exactly when to stop testing. The following are some of the cases which can help to decide when to reduce/stop testing

1. Deadlines (release/test deadlines, etc.)

2. Test cases completed with certain percentage passed

3. Test budget depleted

4. Coverage of code/functionality requirements reaches a specified point

5. Bug rate falls below a certain level

6. Beta or Alpha testing period ends
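Several of the criteria above are measurable, so a team could check them in a script. The sketch below is one possible way to do that; the threshold values (95% pass rate, 80% coverage, 2 bugs per week) are arbitrary assumptions for illustration, not recommended numbers.

```python
def stop_reasons(pass_rate, coverage, bugs_per_week, budget_left,
                 min_pass_rate=0.95, min_coverage=0.80, max_bug_rate=2.0):
    """Return the list of stop criteria that are currently met."""
    reasons = []
    if pass_rate >= min_pass_rate:
        reasons.append("pass rate reached")
    if coverage >= min_coverage:
        reasons.append("coverage reached")
    if bugs_per_week <= max_bug_rate:
        reasons.append("bug rate low")
    if budget_left <= 0:
        reasons.append("budget depleted")
    return reasons


print(stop_reasons(pass_rate=0.97, coverage=0.85, bugs_per_week=1.0, budget_left=500))
print(stop_reasons(pass_rate=0.50, coverage=0.30, bugs_per_week=10.0, budget_left=0))
```

The second call shows the uncomfortable case: testing stops because the budget is gone, not because the product is ready.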

Inadequately Tested Software Negative Impacts

Posted On at 11:10 AM by Rajeev Prabhakaran

It is difficult to test software products, as evidenced by the problems we find in our software every day. The customer risks of not adequately testing software can be as minor as user inconvenience or as major as loss of life. Not only are there customer (end-user) risks for inadequately tested software, there are also corporate risks. Especially for mature products, an inadequately tested product can have serious negative consequences for a company

Software Testing Axioms

Posted On at 11:08 AM by Rajeev Prabhakaran

1. It is impossible to test a program completely

2. Software testing is a risk-based exercise

3. Testing cannot show that bugs don't exist

4. The more bugs you find, the more bugs there are

5. Not all the bugs you find will be fixed

6. Product specifications are never final
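A quick calculation shows why the first axiom holds even for tiny programs. Assume a function that takes two independent 32-bit integers, and assume (optimistically) that one billion test cases can be run per second; both figures are assumptions picked for this sketch.

```python
# Two independent 32-bit inputs give 2**32 * 2**32 input combinations.
combinations = 2 ** 64

tests_per_second = 1_000_000_000  # assumed test throughput
seconds_per_year = 365 * 24 * 60 * 60

years = combinations / tests_per_second / seconds_per_year
print(f"{combinations} combinations, about {years:.0f} years to run them all")
```

Even under these generous assumptions, exhaustive testing of this one function would take centuries, which is why test design has to be risk based.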

Software Testing

Posted On at 10:45 AM by Rajeev Prabhakaran

Intentionally finding errors in the software is called testing. Errors may be functional errors, usability errors, or excess functionality that is not defined by the client. Simply put, it means testing the software to find and report defects. It gives an assurance that the end product is as per the customer requirement specification

Goals of Software Testing

Quality of the software product in terms of functionality and user-friendliness

Testing Operators

Tester, Test Lead, QA Manager, Project Manager, Developer and Client

Test/QA Team Lead

Coordinates the testing activity, communicates testing status to management and manages the test team

Testers

Develop test scripts/cases and data, execute scripts, analyze metrics, and evaluate results for system, integration and regression testing

Test Build Manager/System Administrator/Database Administrator

- Delivers current versions of software to the test environment

- Performs installations of application software, and applies patches (both application and operating system)

- Performs set-up, maintenance, and back up of test environment hardware.

Technical Analyst & Test Configuration Manager

- Performs testing assessment and validates system/functional test requirements

- Maintains the test environment, scripts, software, and test data